How upload data to MySQL from a CSV file?

I am trying to create a node to upload data to my MySQL table, using a CSV file as source. My code is this:

import mysql from 'mysql2/promise';

import {

createReadStream

} from 'fs';

import {

parse

} from 'fast-csv';

export default async function loadCsvToMySql({

host,

database,

username,

password,

fileName

}) {

const connection = await mysql.createConnection({

host,

user: username,

password,

database,

});

const fullFilePath = process.env.BUCKET_FOLDER_PATH + "/" + fileName;

// Definir a função streamFactory

const streamFactory = () => createReadStream(fullFilePath);

await connection.query(

[ ],

{ streamFactory }

);

const [rows] = await connection.query('SELECT COUNT(*) AS count FROM shapes_aux');

await connection.end();

return {

count: rows[0].count

};

}

But I am receiving this error:

"error": "As a result of LOCAL INFILE command server wants to read arquivo.csv file, but as of v2.0 you must provide streamFactory option returning ReadStream."

I couldn´t find a solution. Can someone help me? Thanks!

import mysql from 'mysql2/promise';

import {

createReadStream

} from 'fs';

import {

parse

} from 'fast-csv';

export default async function loadCsvToMySql({

host,

database,

username,

password,

fileName

}) {

const connection = await mysql.createConnection({

host,

user: username,

password,

database,

});

const fullFilePath = process.env.BUCKET_FOLDER_PATH + "/" + fileName;

// Definir a função streamFactory

const streamFactory = () => createReadStream(fullFilePath);

await connection.query(

LOAD DATA LOCAL INFILE '${fullFilePath}' INTO TABLE shapes_aux FIELDS TERMINATED BY ',' ENCLOSED BY '"' LINES TERMINATED BY '\n' IGNORE 1 ROWS[ ],

{ streamFactory }

);

const [rows] = await connection.query('SELECT COUNT(*) AS count FROM shapes_aux');

await connection.end();

return {

count: rows[0].count

};

}

But I am receiving this error:

"error": "As a result of LOCAL INFILE command server wants to read arquivo.csv file, but as of v2.0 you must provide streamFactory option returning ReadStream."

I couldn´t find a solution. Can someone help me? Thanks!

Solution

Hi @Hamilton T da Silva,

I'd start by breaking this up into at least 2 nodes: one to handle import from csv and a second for loading to the DB.

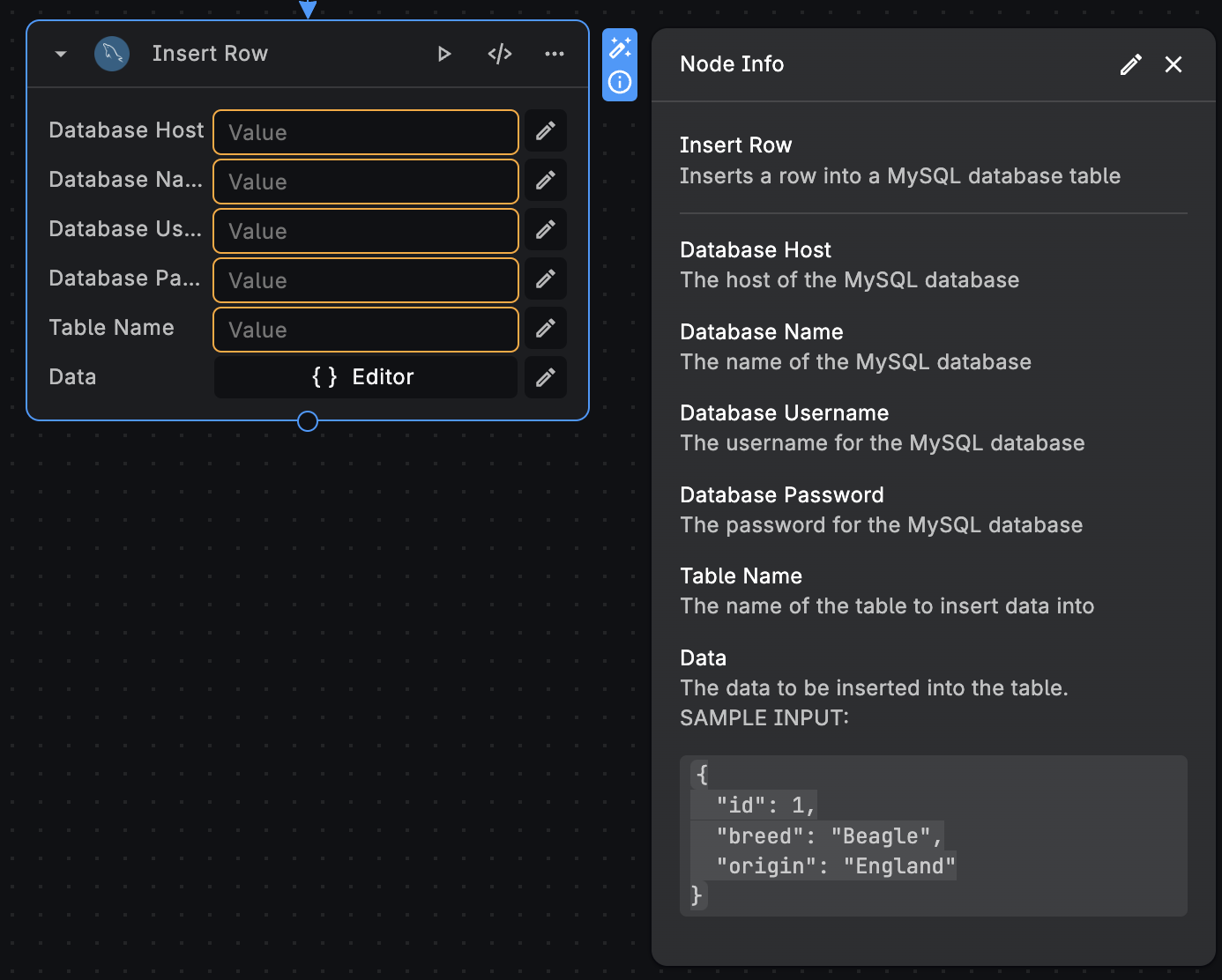

My preference would be to import from the csv to JSON and then load the JSON via the SQL Insert row node (in the attached screenshot).

You could use something like this to convert a line from the csv to JSON:

import { Storage } from '@google-cloud/storage';

import parse from 'csv-parse/lib/sync'; // Import the sync parse method

export default async function readCSVfromGCPStorageBucket({ folderPath, fileName }) {

const storage = new Storage();

const bucketName = process.env.BUCKET;

const filePath = {folderPath}/{fileName};

const bucket = storage.bucket(bucketName);

const file = bucket.file(filePath);

// Download file contents into memory

const contents = await file.download();

const contentString = contents.toString('utf-8');

// Assuming we're reading just one line and it includes headers

const records = parse(contentString, {

columns: true, // Automatically infer column names from the first row

skip_empty_lines: true, // Skip empty lines

from_line: 2, // Skip the header row, start with data

max_record_size: 1 // Read only one record

});

// Convert the first record (if it exists) to JSON

const json = records[0] ? records[0] : null;

return json; // This will be your JSON object from the first line of data (excluding header)

}

Of course this is only a starting point and YMMV - I have not tested this flow, but happy to work with you if you need further help.

HTH.

Martin

I'd start by breaking this up into at least 2 nodes: one to handle import from csv and a second for loading to the DB.

My preference would be to import from the csv to JSON and then load the JSON via the SQL Insert row node (in the attached screenshot).

You could use something like this to convert a line from the csv to JSON:

import { Storage } from '@google-cloud/storage';

import parse from 'csv-parse/lib/sync'; // Import the sync parse method

export default async function readCSVfromGCPStorageBucket({ folderPath, fileName }) {

const storage = new Storage();

const bucketName = process.env.BUCKET;

const filePath = {folderPath}/{fileName};

const bucket = storage.bucket(bucketName);

const file = bucket.file(filePath);

// Download file contents into memory

const contents = await file.download();

const contentString = contents.toString('utf-8');

// Assuming we're reading just one line and it includes headers

const records = parse(contentString, {

columns: true, // Automatically infer column names from the first row

skip_empty_lines: true, // Skip empty lines

from_line: 2, // Skip the header row, start with data

max_record_size: 1 // Read only one record

});

// Convert the first record (if it exists) to JSON

const json = records[0] ? records[0] : null;

return json; // This will be your JSON object from the first line of data (excluding header)

}

Of course this is only a starting point and YMMV - I have not tested this flow, but happy to work with you if you need further help.

HTH.

Martin